Save time on documentation using Care2Report

Automated healthcare reporting

In healthcare, all care providers are required to accurately report patient information. Medical records are a critical component of a patient’s treatment.

As the primary communication tool between care providers, the medical record is key to good patient care: for the patient, for colleagues, and for caregivers and family.

However, too much time is currently spent on documentation, especially on maintaining and recording patient medical information in the Electronic Medical Record (EMR).

The Care2Report research program proposes an innovative solution to this problem: using speech recognition technology, action recognition technology, and language tools to automatically record and summarize an interaction between a care provider and a patient.

Our ambition is to automatically structure this information and prepare a report for the care provider, who only needs to approve its upload to the EMR.

Speech Recognition Technology

Automated transcription and interpretation of conversations with patients.

Action Recognition Technology

Automated identification of care activities during patient consultations.

Semantic Interpretation and Reporting

Ontological structuring of care concepts and syntactic templates for reporting.

Wireless Measurement Recording

Automated transfer of data from measurement devices used in the consultations.

We aim to develop a generic hardware and software platform equipped with a non-intrusive device with camera, microphone and sensor technology. Speech recognition transforms medical conversations into text, action recognition captures treatments, and sensor data provide the results of medical measurements. Semantic technology transforms these observations into meaningful information. Computational linguistic tooling enables automatic preparation of a report of a medical consultation, which the care provider can check and edit before it is uploaded to the EMR.

Our suite of prototypes is currently under development. As soon as a minimum viable product is available, the next step is large-scale testing. For this we need healthcare professionals who conduct one-on-one consultations, who are open to innovation, and who would like to try out the system and provide feedback on it. Most importantly, our algorithms need to be tested with real-life data: recorded consultation conversations together with their medical reports.

For this, we are looking for cooperating partners. Do you want to contribute to this solution for reducing the administrative burden?

Please fill in the form under Contact.

Societal challenge:

Reduction of administrative load

Partly due to the large amount of time required for adequate documentation, care providers experience a high workload. Up to 40% of working hours are spent on administrative tasks, at the expense of time for patient care.

Our mission is to significantly reduce the amount of time spent on administrative tasks.

An enrichment of person-to-person interaction in the professional domain

Automated reporting will give more time for enhanced communication and improved engagement.

More efficient healthcare will be more focused on the true nature of care, which is the general motivation of people working in this sector.

Scientific challenge:

Summarization of communication and actions

Our generic scientific vision is to use speech and action recognition technology to automate reporting. Throughout the development of our hardware and software platform we will face and overcome many scientific challenges related to, among other things, the openness of the software architecture, interaction technology and semantic interpretation.

Mission

A pipeline of artificial intelligence and linguistic components for the summarization of communication and actions.

Our mission is to use speech and action recognition technology to support automated medical reporting. We aim to develop an integrated hardware and software platform for automated medical reporting. This process consists of four stages, which are explained and illustrated below.

Recording of audio, video and sensor input of medical interactions.

To this end, existing standard speech and action recognition technology will be used. The multi-modal inputs from the microphone, the camera and Wi-Fi-enabled measurement devices are recorded, processed and transferred for knowledge interpretation. The speech recognizer enables a full recording of the consultation and carefully distinguishes the statements of the patient from those of the care provider, so that each can be reported accordingly.
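To illustrate the speaker distinction, the Python sketch below assumes that the speech recognizer already labels every recognized statement with a speaker role and a start time (speaker diarization). The names `Role`, `Utterance` and `split_by_role` are hypothetical and are not part of a published Care2Report interface.

```python
from dataclasses import dataclass
from enum import Enum


class Role(Enum):
    """Hypothetical speaker roles assigned by the speech recognizer."""
    PATIENT = "patient"
    CARE_PROVIDER = "care_provider"


@dataclass(frozen=True)
class Utterance:
    """One recognized statement with its speaker and start time in seconds."""
    role: Role
    start: float
    text: str


def split_by_role(utterances: list[Utterance]) -> dict[Role, list[str]]:
    """Group statements per speaker role, preserving chronological order."""
    grouped: dict[Role, list[str]] = {role: [] for role in Role}
    for utt in sorted(utterances, key=lambda u: u.start):
        grouped[utt.role].append(utt.text)
    return grouped


transcript = [
    Utterance(Role.CARE_PROVIDER, 0.0, "What brings you here today?"),
    Utterance(Role.PATIENT, 2.5, "My right ear has been hurting for three days."),
    Utterance(Role.CARE_PROVIDER, 6.1, "Let me have a look."),
]
for role, statements in split_by_role(transcript).items():
    print(role.value, statements)
```

Keeping the two roles apart at this early stage means later steps can, for example, place the patient's own complaints in the subjective part of a report and the care provider's findings elsewhere.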

During a consultation, several examinations can be performed (e.g., auscultation, palpation, percussion) and instruments can be used to monitor a patient's health (e.g., pulse measurement). Relevant information concerning treatments and measurements is converted into semantic data using action recognition technology (e.g., video, sensors). Note that not all information exchanged in a medical consultation is relevant for reporting (e.g., providing advice); such information is therefore omitted from further processing.
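The selection of reportable information can be pictured as a filter over recognized events. In this minimal sketch the category names and the set `REPORTABLE_CATEGORIES` are assumptions made for illustration; the text above only states that, for example, advice is not reported.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical categories treated as reportable in this sketch; the actual
# Care2Report selection criteria are not specified here.
REPORTABLE_CATEGORIES = frozenset({"examination", "measurement"})


@dataclass(frozen=True)
class Observation:
    """A recognized event from video, sensor or speech input."""
    source: str                  # e.g. "video", "sensor", "speech"
    category: str                # e.g. "examination", "measurement", "advice"
    label: str                   # e.g. "auscultation", "pulse"
    value: Optional[str] = None  # measured value, if any


def select_reportable(observations: list[Observation]) -> list[Observation]:
    """Keep only the observations whose category is reportable."""
    return [o for o in observations if o.category in REPORTABLE_CATEGORIES]


observations = [
    Observation("video", "examination", "auscultation"),
    Observation("sensor", "measurement", "pulse", "72 bpm"),
    Observation("speech", "advice", "drink plenty of water"),
]
print([o.label for o in select_reportable(observations)])
```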

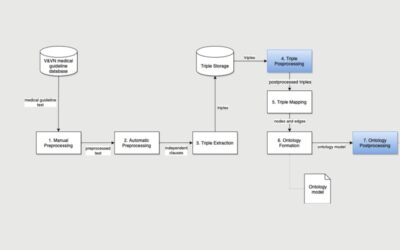

Medical guidelines are matched with the formal representation of conversation, measurements and treatments using semantic technology.

The raw data recorded during the medical consultation have to be formally represented using a knowledge graph representation formalism to allow further analysis.
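A knowledge graph can be stored as a set of (subject, predicate, object) triples. The sketch below is a minimal in-memory stand-in, assuming illustrative predicate names such as `reportsComplaint` and `hasMeasurement`; a production system would more likely use an established formalism such as RDF.

```python
from typing import NamedTuple


class Triple(NamedTuple):
    subject: str
    predicate: str
    obj: str


class ConsultationGraph:
    """Minimal in-memory triple store (illustrative only)."""

    def __init__(self) -> None:
        self._triples: set[Triple] = set()

    def add(self, subject: str, predicate: str, obj: str) -> None:
        self._triples.add(Triple(subject, predicate, obj))

    def objects(self, subject: str, predicate: str) -> list[str]:
        """Return all objects linked to `subject` via `predicate`, sorted."""
        return sorted(
            t.obj for t in self._triples
            if t.subject == subject and t.predicate == predicate
        )


graph = ConsultationGraph()
graph.add("patient", "reportsComplaint", "ear infection")
graph.add("patient", "reportsComplaint", "ear pain")
graph.add("patient", "hasMeasurement", "pulse")
graph.add("pulse", "hasValue", "72 bpm")
print(graph.objects("patient", "reportsComplaint"))
```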

This graph is matched with the so-called Patient Medical Ontology, which is based on the guidelines for the disease at hand. This match converts layman's terminology into the professional medical terminology used in reporting. For example, "ear infection" is transformed into "otitis externa".
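In its simplest form, the terminology conversion is a lookup from normalized lay terms to professional terms. This sketch assumes a flat dictionary as a hypothetical excerpt of the ontology. Only the "ear infection" entry comes from the text; the "high blood pressure" entry is illustrative. Real ontology matching would also use the graph structure and the guidelines, which this sketch does not model.

```python
from collections.abc import Mapping

# Hypothetical excerpt of a Patient Medical Ontology (lay term -> professional term).
LAY_TO_PROFESSIONAL: Mapping[str, str] = {
    "ear infection": "otitis externa",
    "high blood pressure": "hypertension",
}


def normalize(term: str) -> str:
    """Lowercase and collapse whitespace so spoken variants match."""
    return " ".join(term.lower().split())


def to_professional(term: str, ontology: Mapping[str, str] = LAY_TO_PROFESSIONAL) -> str:
    """Map a lay term to professional terminology.

    Unknown terms pass through unchanged so the care provider can still review them.
    """
    return ontology.get(normalize(term), term)


print([to_professional(t) for t in ["Ear  infection", "dizziness"]])
```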

Generation of healthcare reports based on conventions in the specific medical domain.

The knowledge graph of the interpreted consultation conversation has to be summarized in natural language text and put in the desired format. From the graph the essential elements for reporting are selected and prepared for the reporting algorithm.

Reporting conventions (SOEP, ATLS) and abbreviations (Rx – treatment; o.d. – once a day) are integrated into the text generation. We foresee a standard abbreviation list for every healthcare domain.
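Integrating a reporting convention and an abbreviation list could look roughly like the sketch below. It assumes the selected statements are already sorted into the four SOEP sections (Subjectief, Objectief, Evaluatie, Plan) and uses only the two abbreviations mentioned above. The function names are hypothetical, and the simple phrase substitution stands in for real natural language generation.

```python
import re
from collections.abc import Mapping

# Abbreviation list for one healthcare domain (entries taken from the text above).
ABBREVIATIONS: Mapping[str, str] = {
    "treatment": "Rx",
    "once a day": "o.d.",
}

# SOEP section order: Subjectief, Objectief, Evaluatie, Plan.
SOEP_SECTIONS = ("S", "O", "E", "P")


def abbreviate(text: str, abbreviations: Mapping[str, str] = ABBREVIATIONS) -> str:
    """Replace whole-phrase matches with their abbreviation, longest phrase first."""
    for phrase in sorted(abbreviations, key=len, reverse=True):
        short = abbreviations[phrase]
        pattern = rf"\b{re.escape(phrase)}\b"
        # A function replacement avoids backslash processing in the abbreviation.
        text = re.sub(pattern, lambda _m, s=short: s, text, flags=re.IGNORECASE)
    return text


def render_soep(sections: Mapping[str, list[str]]) -> str:
    """Render selected statements as a SOEP-structured note."""
    lines = []
    for key in SOEP_SECTIONS:
        body = abbreviate(" ".join(sections.get(key, [])))
        lines.append(f"{key}: {body}".rstrip())
    return "\n".join(lines)


print(render_soep({
    "S": ["Ear pain for three days."],
    "O": ["Pulse 72 bpm."],
    "E": ["Otitis externa."],
    "P": ["Start treatment: ear drops once a day for 7 days."],
}))
```

Replacing the longest phrases first avoids a shorter entry consuming part of a longer one when abbreviation lists grow.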

Completion of the healthcare reports and uploading through a generic EMR-interface.

The generated report is checked by the care provider, who always remains responsible for the correct entry of the report in the EMR. If necessary, the care provider edits the report after checking it. It is only uploaded after the care provider's approval. Specific actions (e.g., inspection of limbs) and measurements (blood pressure, heart rate) are also prepared for insertion into the appropriate items of the EMR record for the consultation. The generic EMR interface aims to support insertion into any type of EMR.
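The approval step and the generic EMR interface can be sketched as a draft report that refuses upload until it is approved, plus one adapter per EMR system behind a common interface. All names here (`EMRAdapter`, `DraftReport`, `submit`, `InMemoryEMR`) are hypothetical. The rule that an edit withdraws an earlier approval is a design assumption of this sketch, not something stated above.

```python
from dataclasses import dataclass, field
from typing import Protocol


class NotApprovedError(Exception):
    """Raised when an unapproved report is submitted for upload."""


class EMRAdapter(Protocol):
    """Hypothetical generic EMR interface; one adapter per EMR product."""
    def upload(self, patient_id: str, text: str, items: dict[str, str]) -> None: ...


@dataclass
class DraftReport:
    patient_id: str
    text: str
    items: dict[str, str] = field(default_factory=dict)  # e.g. {"heart_rate": "72 bpm"}
    approved: bool = False

    def edit(self, new_text: str) -> None:
        """Assumption of this sketch: any edit withdraws an earlier approval."""
        self.text = new_text
        self.approved = False

    def approve(self) -> None:
        self.approved = True


def submit(report: DraftReport, emr: EMRAdapter) -> None:
    """Upload the report only after explicit approval by the care provider."""
    if not report.approved:
        raise NotApprovedError("report must be approved by the care provider first")
    emr.upload(report.patient_id, report.text, report.items)


class InMemoryEMR:
    """Stand-in adapter that stores uploads in a list, e.g. for testing."""
    def __init__(self) -> None:
        self.records: list[tuple[str, str, dict[str, str]]] = []

    def upload(self, patient_id: str, text: str, items: dict[str, str]) -> None:
        self.records.append((patient_id, text, dict(items)))
```

Placing the approval check in `submit` rather than in each adapter keeps the care provider's sign-off independent of which EMR the report ends up in.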

Mission: To serve professionals in society by architecting innovative systems of artificial intelligence and linguistic components for the summarization of communication and actions to reduce the administrative burden.

Follow our work

Scientific projects

PhD projects

Several projects are set up as a part of the overarching Care2Report program. This page provides information about these projects.

Graduation projects

Graduation projects are carried out by bachelor's and master's students of Utrecht University.

News

Keynote Care2Report in Romania

Prof Sjaak Brinkkemper gave an invited keynote on the Care2Report research program at the 30th anniversary Information Systems Development conference in Cluj-Napoca, Romania. His keynote was entitled: "Reducing the...

Care2Report project awarded a subsidy

The Care2Report C2R-Building Blocks project was recently awarded a subsidy by the alliance of Eindhoven University of Technology, Wageningen University and Research, the Utrecht University Medical Centre Utrecht, and...

Contact

If you would like to stay up-to-date about the progress of our research, please subscribe.