Abstract

Documenting patient information in the electronic medical record is time-consuming and comes at the expense of direct patient care. We propose an integrated solution that automates the process of medical reporting.

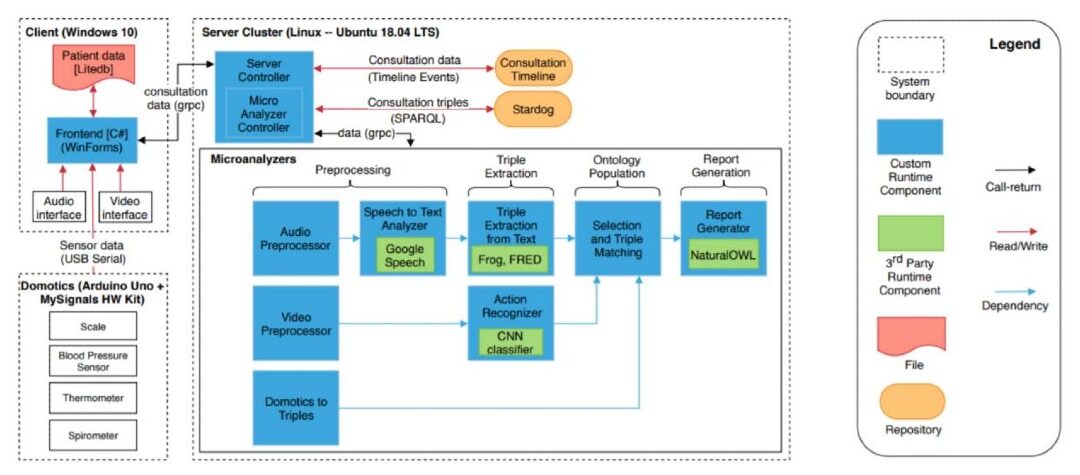

This vision is enabled by integrating speech and action recognition technology with semantic interpretation based on knowledge graphs. This paper presents our dialogue summarization pipeline, which transforms speech into a medical report via transcription and a formal representation. We discuss the functional and technical architecture of our Care2Report system and present an initial evaluation on data from real consultation sessions.
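
The abstract names the pipeline stages (speech, transcription, formal representation, report) but not their interfaces. The following is a minimal, hypothetical sketch of how such stages could be chained, assuming transcripts arrive as (speaker, text) pairs and that the formal representation is a list of subject-predicate-object triples. All names (`transcribe`, `interpret`, `generate_report`, `Triple`, the `hasSymptom` predicate, the toy lexicon) are illustrative assumptions, and simple keyword matching stands in for real speech recognition and knowledge-graph-based interpretation; it is not the Care2Report implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Utterance:
    speaker: str  # hypothetical speaker label, e.g. "GP" or "patient"
    text: str


@dataclass(frozen=True)
class Triple:
    # One statement of the formal representation (subject, predicate, object).
    subject: str
    predicate: str
    obj: str


# Hypothetical toy lexicon mapping surface terms to knowledge-graph concepts.
SYMPTOM_CONCEPTS = {"headache": "Headache", "fever": "Fever", "cough": "Cough"}


def transcribe(segments):
    """Stand-in for speech recognition: segments are already (speaker, text) pairs."""
    return [Utterance(speaker, text) for speaker, text in segments]


def interpret(utterances):
    """Toy semantic interpretation: map patient mentions of known terms to triples."""
    triples = []
    for u in utterances:
        if u.speaker != "patient":
            continue  # only the patient's own statements count as reported symptoms here
        lowered = u.text.lower()
        for term, concept in SYMPTOM_CONCEPTS.items():
            triple = Triple("patient", "hasSymptom", concept)
            if term in lowered and triple not in triples:
                triples.append(triple)  # keep first-mention order, no duplicates
    return triples


def generate_report(triples):
    """Render the formal representation as a minimal plain-text report."""
    symptoms = sorted(t.obj.lower() for t in triples if t.predicate == "hasSymptom")
    return "\n".join(["Reported symptoms:"] + [f"- {s}" for s in symptoms])


if __name__ == "__main__":
    session = [
        ("GP", "What brings you in today? Any cough?"),
        ("patient", "I have had a headache and a fever since Monday."),
    ]
    print(generate_report(interpret(transcribe(session))))
```

Keeping each stage a separate function mirrors the staged flow described above, so any one stage (for example, `transcribe`) could be replaced by a real component without touching the others.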

In some countries, such as the Netherlands, regulations require general practitioners to prepare a detailed report of each consultation for accountability purposes. Automatic report generation during medical consultations can simplify this time-consuming procedure. Action recognition for the automatic reporting of medical actions is not a well-researched area, and no medical video databases are publicly available. In this paper we present Video2Report, the first publicly available video database of medical consultations, involving interactions between a general practitioner and a single patient. After reviewing the standard medical procedures for general practitioners, we select the most important actions to record; a medical professional performs these actions and trains additional actors to create the resource. The actions and the area under investigation during each action are annotated separately.

In this paper, we describe the collection setup, provide several action recognition baselines using OpenPose feature extraction, and make the database, the evaluation protocol, and all annotations publicly available. The database contains 192 sessions recorded with up to three cameras, comprising 332 single-action clips and 119 multiple-action sequences. Although the dataset is too small for end-to-end deep learning, we believe it will be useful for developing approaches to investigating doctor-patient interactions and for medical action recognition.
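
The abstract does not specify how the OpenPose features are turned into a classifier. As a rough, hypothetical illustration of what a small-data baseline on pose features might look like, the sketch below assumes each clip is a list of frames, each frame a list of `(x, y, confidence)` keypoints as OpenPose emits them, with the neck at index 1 (as in the BODY_25 layout). The choices of centering on the neck, pooling each joint's coordinates into a mean and standard deviation over the clip, and classifying with a nearest-centroid rule are our own assumptions, not the baselines reported in the paper.

```python
import math
from statistics import fmean, pstdev

NECK = 1  # assumed neck index (OpenPose BODY_25 layout)


def clip_features(frames, min_conf=0.1):
    """Pool a clip of keypoint frames into a fixed-length feature vector.

    Each joint's coordinates are centered on the neck; per joint we keep
    [x_mean, x_std, y_mean, y_std] over all frames where it was detected.
    """
    n_joints = len(frames[0])
    xs = [[] for _ in range(n_joints)]
    ys = [[] for _ in range(n_joints)]
    for frame in frames:
        nx, ny, nc = frame[NECK]
        if nc < min_conf:
            continue  # skip frames where the reference joint was not detected
        for j, (x, y, c) in enumerate(frame):
            if c >= min_conf:
                xs[j].append(x - nx)
                ys[j].append(y - ny)
    feats = []
    for j in range(n_joints):
        for vals in (xs[j], ys[j]):
            feats.append(fmean(vals) if vals else 0.0)
            feats.append(pstdev(vals) if vals else 0.0)
    return feats


def fit_centroids(features, labels):
    """Average the feature vectors of each action label."""
    sums, counts = {}, {}
    for f, y in zip(features, labels):
        acc = sums.setdefault(y, [0.0] * len(f))
        for i, v in enumerate(f):
            acc[i] += v
        counts[y] = counts.get(y, 0) + 1
    return {y: [v / counts[y] for v in acc] for y, acc in sums.items()}


def predict(centroids, f):
    """Return the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda y: math.dist(centroids[y], f))


if __name__ == "__main__":
    # Synthetic 3-joint clips (nose, neck, wrist): a still wrist vs. a moving one.
    def make_clip(wrist_ys):
        return [[(100.0, 50.0, 0.9), (100.0, 100.0, 0.9), (130.0, wy, 0.9)] for wy in wrist_ys]

    train = [make_clip([150.0] * 10), make_clip([60.0, 140.0] * 5)]
    centroids = fit_centroids([clip_features(c) for c in train], ["still", "moving"])
    print(predict(centroids, clip_features(make_clip([70.0, 130.0] * 4))))
```

A nearest-centroid rule has no trainable parameters beyond one mean vector per class, which suits a dataset of a few hundred clips better than an end-to-end deep model.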